Subterranean survival

Now throw into the mix inspiration from a book entitled An Immense World: How Animal Senses Reveal the Hidden Realms Around Us (Ed Yong), and Bjørn’s thinking gets even more fascinating. The book explores how animals perceive the world differently from humans, and a specific story about the star-nosed mole resonated with him. The book describes “an intelligent hunter and explorer that navigates its world not through sight, but through touch. This creature, living in darkness, has developed a unique way of ‘seeing’ its environment using its star-shaped snout.” This story illustrated to Bjørn “how different forms of intelligence perceive the world in ways that are almost unimaginable to us.”

Challenge your perceptions

The fallout from all of this was the design and development of Paragraphica, a context-to-image camera that uses data, not light, to create images, and which offers “a different way of seeing the world, one that is based on data and AI interpretation rather than human perception.” Bjørn feels that it’s a tool that falls “somewhere between critical art and consumer product,” allowing users to explore the ‘dreams’ of AI as he sees it, “providing a glimpse into a form of intelligence that is fundamentally different from our own.”

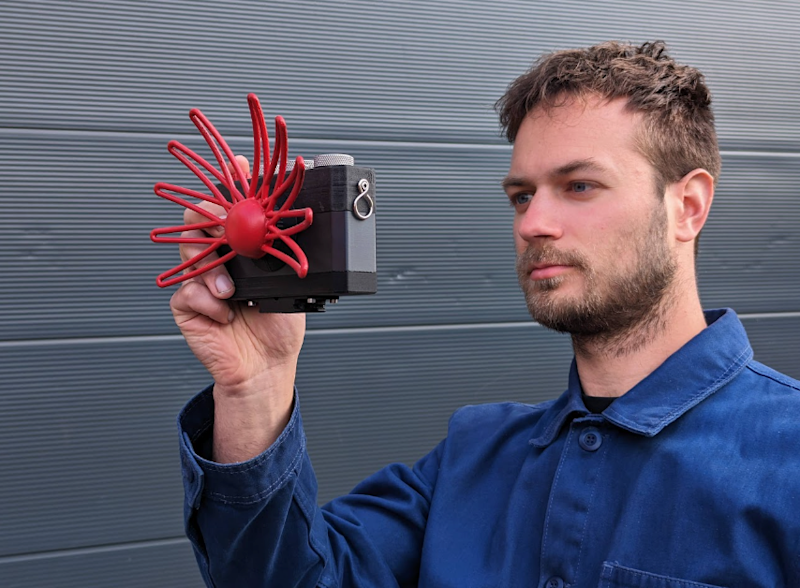

Perhaps the most striking thing about the camera is the design of the cover on the front, where typically on a camera you’d find a lens, and we have the star-nosed mole to thank for that. Nature plays a key part in a lot of Bjørn’s designs, and this small burrowing mammal’s antenna-like snout was “the perfect inspiration for the camera.” In addition, Bjørn wanted the front of the camera to evoke a “data collector”, such as a radio antenna or satellite dish.

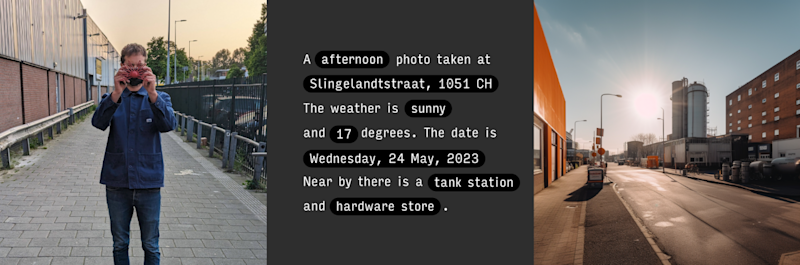

Bjørn’s camera works by collecting data related to its location using open APIs, including OpenWeatherMap and Mapbox. This data is used to compose a paragraph (hence the name of the camera) that details a representation of the current place and moment, and this description is then used as the AI image prompt.

“In a way, you can think of this process as filling in the blanks of a template paragraph,” Bjørn suggests. “I then send this paragraph as a prompt for a text-to-image AI model to convert the paragraph into a ‘photo’.” Some of the resulting images have been surprisingly accurate, “but they never look like the real place – it helps to think of the resulting ‘photo’ as a data visualisation.”

Bjørn wrote the software for the project, which uses a mix of a local Python script to simulate key presses, and a web application running in a browser. The web app was made using the Noodl platform, “and essentially gathers key parameters from the web, like weather, date, street name, time, and nearby places, and recomposes them into a template description.”
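The "filling in the blanks" step described above can be illustrated with a minimal Python sketch. This is not Bjørn's code (his web app was built in Noodl): the `Context` fields, the template wording, and the function names are all hypothetical, and the values would in practice come from services such as OpenWeatherMap and Mapbox rather than being typed in by hand.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Context:
    """Hypothetical container for the parameters gathered from the web."""
    street: str
    city: str
    weather: str
    temperature_c: float
    nearby: List[str]
    moment: datetime


# Hypothetical template paragraph; the real wording is not given in the article.
TEMPLATE = (
    "A photo taken at {street} in {city}, at {time} on {date}. "
    "The weather is {weather} with a temperature of {temp:.0f} degrees Celsius. "
    "Nearby there are {nearby}."
)


def join_places(places: List[str]) -> str:
    """Turn a list of place names into a readable phrase."""
    if not places:
        return "no notable places"
    if len(places) == 1:
        return places[0]
    return ", ".join(places[:-1]) + " and " + places[-1]


def compose_paragraph(ctx: Context) -> str:
    """Fill the blanks of the template with the gathered context."""
    return TEMPLATE.format(
        street=ctx.street,
        city=ctx.city,
        time=ctx.moment.strftime("%H:%M"),
        date=ctx.moment.strftime("%d/%m/%Y"),
        weather=ctx.weather,
        temp=ctx.temperature_c,
        nearby=join_places(ctx.nearby),
    )


if __name__ == "__main__":
    example = Context(
        street="Main Street",
        city="Amsterdam",
        weather="light rain",
        temperature_c=11.6,
        nearby=["a café", "a park"],
        moment=datetime(2023, 5, 30, 14, 5),
    )
    # The resulting paragraph would then be sent as the text-to-image prompt.
    print(compose_paragraph(example))
```

The point of the sketch is that the 'photo' depends only on the paragraph: nothing about the scene in front of the camera is captured, which is why it helps to think of the result as a data visualisation.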

Dial development

A Raspberry Pi 4 powers the device from within a 3D-printed shell. “Using a [Raspberry] Pi for the project gave me the freedom to prototype fast and explore some ideas for how it would work,” Bjørn explains. “And I also had a Raspberry Pi 4 with a screen already attached to it laying in my workshop, so this felt like a good starting point.”

As with most projects that we showcase, there were challenges to overcome during development, including 3D-modelling and 3D-printing the unique mole-inspired casing, and setting up the code and API pipeline for Raspberry Pi.

Bjørn has also been tweaking the dials on the camera, which enable the user to control the data and AI parameters, thus influencing the final ‘photo’. “I have recently updated the two dials to affect the photo styles and years,” he explains. “Changing the year the photo should be taken at is particularly fun, as you get to picture your street in the 1960s, or 2077 into the future.”
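As a rough illustration of how two dials could steer the prompt, the Python sketch below maps a normalised dial position (0.0 to 1.0) to a style and a year, then appends both to the description. The style list, the year range, and every function name here are assumptions made for the example; the article only states that the dials affect photo styles and years.

```python
# Hypothetical style options and year range; the camera's real values are not documented here.
STYLES = ["photograph", "oil painting", "pencil sketch", "35mm film still"]
YEAR_MIN, YEAR_MAX = 1900, 2100


def clamp(position: float) -> float:
    """Keep a dial reading inside the 0.0 to 1.0 range."""
    return max(0.0, min(1.0, position))


def dial_to_style(position: float) -> str:
    """Split the dial's travel into equal segments, one per style."""
    index = min(int(clamp(position) * len(STYLES)), len(STYLES) - 1)
    return STYLES[index]


def dial_to_year(position: float) -> int:
    """Map the dial's travel linearly onto the year range."""
    return round(YEAR_MIN + clamp(position) * (YEAR_MAX - YEAR_MIN))


def apply_dials(paragraph: str, style_pos: float, year_pos: float) -> str:
    """Append the dial-selected style and year to the prompt paragraph."""
    style = dial_to_style(style_pos)
    year = dial_to_year(year_pos)
    return f"{paragraph} Style: {style}. Year: {year}."


if __name__ == "__main__":
    print(apply_dials("A photo taken at Main Street.", 0.0, 0.3))
```

Appending the parameters as plain text keeps the dials independent of the data-gathering step: the same location paragraph can be re-rendered for a different decade simply by turning a dial.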

View-finder

Bjørn describes the feedback received thus far as “mixed”, with the area of AI igniting a range of reactions and opinions. Some people saw it as a product, but “struggled to connect it to a problem that needed to be solved.” Others have understood the concept and absolutely loved it.

However, Bjørn feels that it “definitely shows that the concept and manifestation hit a sensitive point.” He’s clear that his creation was intended to highlight and encourage discussion around AI perception, along with “the increasing use of AI in creative domains and technologies we use daily to capture reality. I think it did the job perfectly.”