Raspberry Pi computers are famously cheap and cheerful. Great for playing around with and the odd little project, right? Well, sometimes.

However, our little friend is a surprisingly powerful computer, and when you get lots of them working together, amazing things can happen.

The concept of computer ‘clusters’ (many computers working together as one) is nothing new, but when you have a device as affordable as Raspberry Pi, you can start to rival much more expensive systems by using several in parallel. Here, we’ll learn how to make a cluster computer from a lot of little computers.

You'll need

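Four Raspberry Pi 4 computers

Four microSD cards

A cluster case

A multi-port USB power supply

Four USB-C cables

A Gigabit Ethernet switch

Four Ethernet cables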

Cluster assemble!

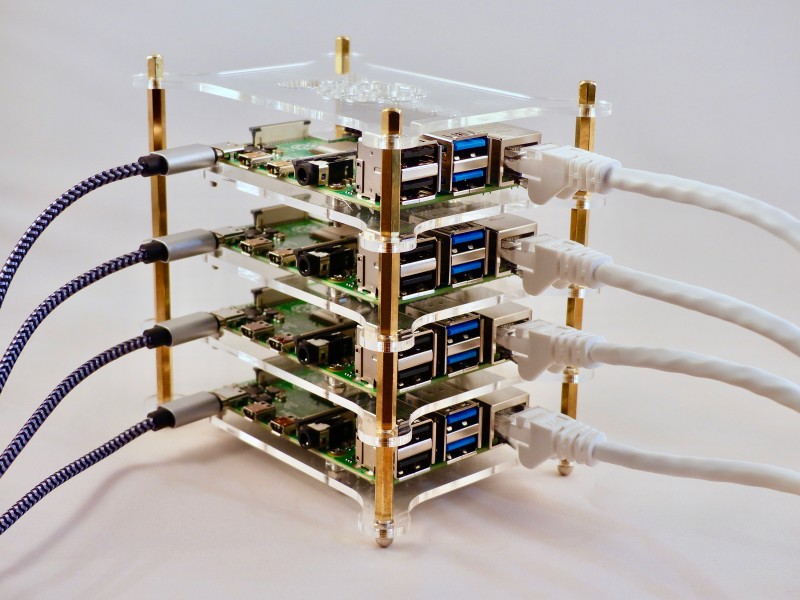

A cluster of Raspberry Pi computers can start with as few as two and grow into hundreds. For our project, we’re starting with a modest four. Each one, known as a ‘node’, will carry out part of our task for us, and they all work in parallel to produce the result a lot quicker than a single node ever could. Some nice ‘cluster cases’ are available, and we start here by assembling our Raspberry Pi 4B computers into a four-berth chassis. Many different configurations are available, including fan cooling.

Power up

Consider the power requirements for your cluster. With four nodes, it isn’t ideal to have four separate PSUs driving them: as well as being ugly, it’s inefficient. Instead, track down a good-quality, powerful multi-port USB charger that can power your chosen number of computers. Then all you need are the cables to link them, and you’re using a single mains socket. USB units are available that can handle eight Raspberry Pi computers without breaking a sweat. Do be careful of the higher demands of Raspberry Pi 4, though: each board is rated for a 5V/3A supply, so make sure the charger can deliver that to every port at the same time.
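To size the charger, multiply the per-board rating by the number of nodes. This is a minimal sketch that assumes every Raspberry Pi 4 is budgeted at its recommended 5V/3A; real draw is usually lower, so treat the result as a safe upper bound rather than a measurement.

```python
# Rough power budget for a cluster fed from one multi-port USB charger.
# Assumption: each Raspberry Pi 4 is budgeted at its recommended 5 V / 3 A supply.
from typing import Tuple

SUPPLY_VOLTS = 5.0
AMPS_PER_PI4 = 3.0


def cluster_budget(nodes: int, amps_per_node: float = AMPS_PER_PI4) -> Tuple[float, float]:
    """Return (total amps, total watts) the charger should deliver at once."""
    total_amps = nodes * amps_per_node
    return total_amps, total_amps * SUPPLY_VOLTS


if __name__ == "__main__":
    for n in (4, 8):
        amps, watts = cluster_budget(n)
        print(f"{n} nodes: {amps:.0f} A at 5 V, about {watts:.0f} W")
```

For our four nodes that comes to 12A, or about 60W, so check the charger’s total rating as well as its per-port rating.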

Get talking

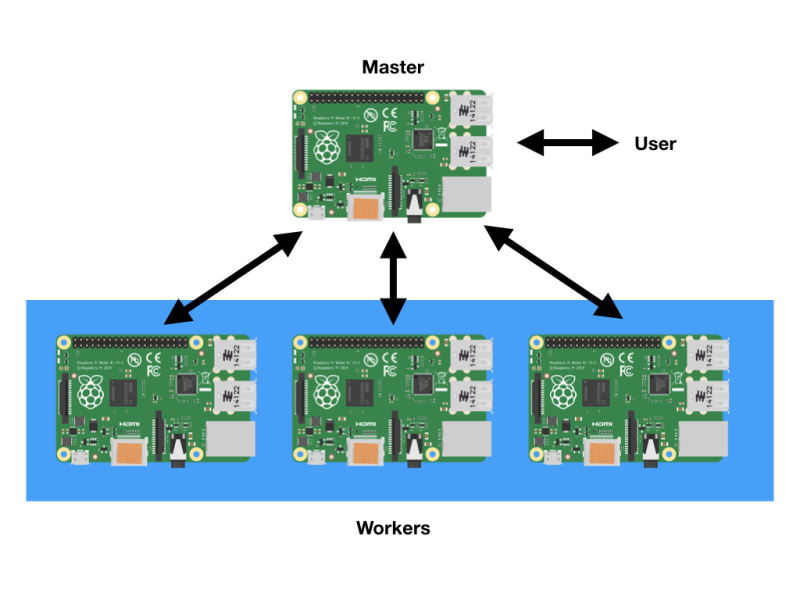

A cluster works by communication. A ‘master’ node is in charge of the cluster, and the ‘workers’ are told what to do and report back their results on demand. To achieve this, we’re using wired Ethernet on a dedicated network. It’s not essential to do it this way, but for data-intensive applications it’s advisable for the cluster to have its own private link-up, so it can exchange instructions and data without being hampered by wireless LAN or other network traffic. So, in addition to wireless LAN, we’re linking each node to an isolated Gigabit Ethernet switch.

Raspbian ripple

We’re going to access each node using wireless LAN so the Ethernet port is available for cluster work. For each ‘node’, burn Raspbian Buster Lite to a microSD card, boot it up, and make sure it’s up to date with sudo apt -y update && sudo apt -y upgrade. Then run sudo raspi-config and perform the following steps:

• Change the ‘pi’ user password.

• Under ‘Network Options’, change the hostname to nodeX, replacing X with a unique number (node1, node2, and so on). Node1 will be our ‘master’.

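• Set up wireless LAN (also under ‘Network Options’), as we’ll use it to reach each node.

• Under ‘Interfacing Options’, enable SSH: the key exchange and mpiexec both rely on it.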

• Exit and reboot when prompted.

Get a backbone

The wired Ethernet link is known as the cluster’s ‘backbone’. You need to give each node a static address on the backbone manually, as there is no DHCP server on it to hand addresses out. We’re going to use the 10.0.0.0/24 subnet (10.0.0.1 to 10.0.0.254). If your regular network already uses this range, choose something different, such as 192.168.10.0/24. For each node, from the command line, edit the network configuration:

sudo nano /etc/dhcpcd.conf

Go to the end of the file and add the following:

interface eth0

static ip_address=10.0.0.1/24

For each node, replace the last number of ‘10.0.0.1’ with a new unique value: 10.0.0.2, 10.0.0.3, and so on. Reboot each node as you go. You should then be able to ping each node from the others; for example, from 10.0.0.1:

ping 10.0.0.2
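If you’d rather not work out each address by hand, the sketch below prints the dhcpcd.conf lines for a given node and pings every backbone address. It assumes nodes are numbered from 1, that node N gets host address N on the subnet, and that the standard Linux ping command (with its -c and -W options) is installed, as it is on Raspbian.

```python
# Sketch: derive backbone addresses, print a node's dhcpcd.conf stanza,
# and ping every node. Assumes node N gets host address N on SUBNET.
import ipaddress
import subprocess

SUBNET = ipaddress.ip_network("10.0.0.0/24")  # change if your home network already uses it


def backbone_address(node: int, subnet=SUBNET) -> ipaddress.IPv4Address:
    """Node 1 -> 10.0.0.1, node 2 -> 10.0.0.2, and so on."""
    if not 1 <= node < subnet.num_addresses - 1:  # skip network and broadcast addresses
        raise ValueError("node {} does not fit in {}".format(node, subnet))
    return subnet.network_address + node


def dhcpcd_stanza(node: int, subnet=SUBNET) -> str:
    """The lines to append to /etc/dhcpcd.conf on the given node."""
    return f"interface eth0\nstatic ip_address={backbone_address(node, subnet)}/{subnet.prefixlen}\n"


def ping(address) -> bool:
    """Send one ping with a 1-second timeout; True if the node answered."""
    try:
        result = subprocess.run(
            ["ping", "-c", "1", "-W", "1", str(address)],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:  # no ping command on this machine
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print(dhcpcd_stanza(2))  # example: what node2 needs in its dhcpcd.conf
    for node in range(1, 5):  # our four-node cluster
        address = backbone_address(node)
        print(f"node{node} {address}: {'up' if ping(address) else 'no reply'}")
```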

Brand new key

For the cluster to work, the nodes need to be able to log in to each other over SSH without a password; in particular, the master node uses SSH to start processes on each worker. To do this, we use SSH keys. This can be a little laborious, but only needs to be done once. On each node, run the following:

ssh-keygen -t rsa

This creates a unique digital ‘identity’ (a public/private key pair) for the computer. You’ll be asked a few questions; just press RETURN for each one and do not create a passphrase when asked. Next, tell the master (node1, 10.0.0.1 in our setup) about the keys by running the following on every other node:

ssh-copy-id 10.0.0.1

Finally, do the reverse on the master node (node1, 10.0.0.1): run ssh-copy-id once for each of the other nodes (ssh-copy-id 10.0.0.2, and so on) so its key reaches every node in the cluster.
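On a bigger cluster, typing ssh-copy-id for every worker gets tedious. Here is a minimal sketch (a hypothetical copy_keys.py) that loops over the workers from node1. It assumes ssh-keygen has already been run there and that the workers are 10.0.0.2 to 10.0.0.4; by default it only prints what it would do.

```python
# Sketch: push node1's public key to every worker, one ssh-copy-id per host.
# Assumptions: ssh-keygen already run on node1; edit WORKERS to suit your cluster.
# Without --run on the command line it only prints the commands (a dry run).
import subprocess
import sys

WORKERS = ["10.0.0.2", "10.0.0.3", "10.0.0.4"]


def copy_id_command(host: str) -> list:
    """The same command you would type by hand, for one host."""
    return ["ssh-copy-id", host]


def distribute_key(hosts=WORKERS, run=False):
    for host in hosts:
        command = copy_id_command(host)
        if run:
            # Prompts for that node's password the first time; stops on any failure.
            subprocess.run(command, check=True)
        else:
            print("would run:", " ".join(command))


if __name__ == "__main__":
    distribute_key(run="--run" in sys.argv[1:])
```

Run python3 copy_keys.py first to check the host list, then python3 copy_keys.py --run to copy the key for real.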

Install MPI

The magic that makes our cluster work is MPI (Message Passing Interface). This standard lets multiple computers share out tasks amongst themselves and send back results. We’ll install MPI on each node of our cluster and, at the same time, install the Python bindings that allow us to take advantage of its magical powers.

On each node, run the following:

sudo apt install mpich python3-mpi4py

Once complete, test that MPI is working on each node:

mpiexec -n 1 hostname

You should just get the name of the node echoed back at you. The -n option sets how many processes to start; with no host list, they all run on the local machine.

Let’s get together

Time for our first cluster operation. From node1 (10.0.0.1), issue the following command:

mpiexec -n 4 --hosts 10.0.0.1,10.0.0.2,10.0.0.3,10.0.0.4 hostname

We’re asking the master supervisor process, mpiexec, to start four processes (-n 4), one on each host. If you’re not using four hosts, or are using different IP addresses, adjust the -n value and the host list to match. The command hostname just echoes the node’s name, so if all is well, you’ll get a list of the four members of the cluster. You’ve just done a bit of parallel computing!
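The hostname test has a Python counterpart. This sketch (a hypothetical hello_mpi.py) uses mpi4py to print each process’s rank, the total number of processes, and the node it landed on. It assumes python3-mpi4py is installed and that the file sits at the same path on every node.

```python
# hello_mpi.py (sketch): a Python version of 'mpiexec ... hostname'.
try:
    from mpi4py import MPI
except ImportError:  # lets greeting() be used on a machine without MPI
    MPI = None


def greeting(rank: int, size: int, host: str) -> str:
    return f"Hello from rank {rank} of {size} on {host}"


def main():
    if MPI is None:
        print("mpi4py not found; install it with: sudo apt install python3-mpi4py")
        return
    comm = MPI.COMM_WORLD  # every process started by mpiexec
    print(greeting(comm.Get_rank(), comm.Get_size(), MPI.Get_processor_name()), flush=True)


if __name__ == "__main__":
    main()
```

Running mpiexec -n 4 --hosts 10.0.0.1,10.0.0.2,10.0.0.3,10.0.0.4 python3 hello_mpi.py should print four lines, one per node, in no particular order.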

Is a cluster of one still a cluster?

Now we’ve confirmed the cluster is operational, let’s put it to work. The prime.py program is a simple script that identifies prime numbers. Enter the code shown in the listing (or download it from magpi.cc/EWASJx) and save it on node1 (10.0.0.1). The code takes a single argument, the maximum number to reach before stopping, and will return how many prime numbers were identified during the run. Start by testing it on the master node:

mpiexec -n 1 python3 prime.py 1000

Translation: ‘Run a single instance on the local node that runs prime.py testing for prime numbers up to 1000.’

This should run pretty quickly, probably well under a second, and find 168 primes.
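The magazine listing itself isn’t reproduced here. As a stand-in, this is a minimal sketch of how a script like prime.py could share the work with mpi4py. The function names and the way numbers are divided between ranks are our own assumptions, not the original code; it also avoids math.isqrt so it runs on the older Python 3 that ships with Buster.

```python
# prime.py (sketch, not the magazine listing): count primes up to a limit,
# sharing the work between MPI ranks and gathering the counts on rank 0.
import sys

try:
    from mpi4py import MPI
except ImportError:  # lets the helpers run without MPI installed
    MPI = None


def is_prime(n: int) -> bool:
    """Trial division by odd numbers up to the square root of n."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    divisor = 3
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 2
    return True


def count_for_rank(limit: int, rank: int, size: int) -> int:
    """Rank r tests the odd numbers 3 + 2r, 3 + 2r + 2*size, ...; rank 0 also counts 2."""
    count = 1 if rank == 0 and limit >= 2 else 0
    for n in range(3 + 2 * rank, limit + 1, 2 * size):
        if is_prime(n):
            count += 1
    return count


def main():
    # The limit comes from the command line, defaulting to 1000.
    limit = int(sys.argv[1]) if len(sys.argv) > 1 and sys.argv[1].isdigit() else 1000
    if MPI is None:
        print(f"{count_for_rank(limit, 0, 1)} primes up to {limit} (no MPI)")
        return
    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()
    start = MPI.Wtime()
    counts = comm.gather(count_for_rank(limit, rank, size), root=0)  # None except on rank 0
    if rank == 0:
        print(f"{sum(counts)} primes up to {limit} in {MPI.Wtime() - start:.2f} s")


if __name__ == "__main__":
    main()
```

Run it like the commands above, for example mpiexec -n 1 python3 prime.py 1000, which should report 168 primes. Interleaving the odd numbers, rather than giving each rank one contiguous block, means every rank gets a similar mix of small (cheap) and large (more expensive) numbers to test.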

Multiplicity

In order for the cluster to work, each node needs an identical copy of the script, in the same place. So, copy the script to each node. Assuming the file is in your home directory, the quick way to do this is (from node1):

scp ~/prime.py 10.0.0.x:

Replace x with the number of the node required: scp (secure copy) will copy the script to that node. You can check this has worked by going to each node and running the same command we ran on node1. Once that’s done, you’re ready to start some real cluster computing.
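Another quick way to confirm every node has the same copy is to compare fingerprints of the file. This sketch (a hypothetical checksum script) assumes prime.py lives at ~/prime.py on each node, as in the scp step; run it on each node and check that the printed values match.

```python
# Sketch: print this node's name and a SHA-256 fingerprint of ~/prime.py.
import hashlib
import os
import socket


def fingerprint(path: str) -> str:
    """SHA-256 of the file's bytes, read in 64 KiB blocks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(65536), b""):
            digest.update(block)
    return digest.hexdigest()


if __name__ == "__main__":
    path = os.path.expanduser("~/prime.py")
    if os.path.exists(path):
        # The first 16 hex digits are plenty to spot a mismatched copy by eye.
        print(socket.gethostname(), fingerprint(path)[:16])
    else:
        print(socket.gethostname(), "has no ~/prime.py")
```

If you copy this script to every node as well, launching it with mpiexec and the same host list prints one line per node in a single go.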

Compute!

To start the supercomputer, run this command from the master (node1):

mpiexec -n 4 --hosts 10.0.0.1,10.0.0.2,10.0.0.3,10.0.0.4 python3 prime.py 100000

Each process gets a ‘rank’: a unique ID. The master is always rank 0. The script uses the rank to decide which range of numbers each process checks, so no number is checked for ‘primeness’ twice. When each process finishes, it reports the primes it found back to the master; this is known as ‘gathering’. The master then pulls all the data together and reports the result. In more advanced applications, the master can also hand out different data sets to the nodes (‘scattering’).
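To illustrate scattering as well as gathering, here is a sketch in which the master splits a list of numbers into one chunk per rank with mpi4py’s comm.scatter, each rank filters its chunk, and comm.gather collects the pieces. The lower-case scatter and gather calls send ordinary Python objects; the chunks helper and the task itself are our own illustrative choices.

```python
# scatter_gather.py (sketch): master scatters work, every rank processes
# its chunk, master gathers the results.
try:
    from mpi4py import MPI
except ImportError:  # lets chunks() be used without MPI installed
    MPI = None


def chunks(items, n):
    """Split items into n nearly equal, contiguous lists (some may be empty)."""
    q, r = divmod(len(items), n)
    out, start = [], 0
    for i in range(n):
        end = start + q + (1 if i < r else 0)
        out.append(items[start:end])
        start = end
    return out


def is_prime(n):
    if n < 2:
        return False
    divisor = 2
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True


def main():
    if MPI is None:
        print("mpi4py not available")
        return
    comm = MPI.COMM_WORLD
    rank, size = comm.Get_rank(), comm.Get_size()
    # Only the master builds the data set; the other ranks pass None.
    work = chunks(list(range(1000)), size) if rank == 0 else None
    mine = comm.scatter(work, root=0)      # each rank receives one chunk
    found = [n for n in mine if is_prime(n)]
    results = comm.gather(found, root=0)   # list of lists on rank 0, None elsewhere
    if rank == 0:
        primes = [p for part in results for p in part]
        print(f"{len(primes)} primes below 1000; largest is {primes[-1]}")


if __name__ == "__main__":
    main()
```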

Final scores

You may have noticed we asked for all the primes up to 1000 in our first test. This isn’t a great benchmark, as it completes so quickly; 100,000 takes a little longer. In our tests, a single node took 238.35 seconds, but the four-node cluster managed it in 49.58 seconds: nearly five times faster!
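The ‘nearly five times’ figure is the speedup: single-node time divided by cluster time. Dividing that by the number of processes gives the parallel efficiency, a common way to see how much each extra node contributed. This small sketch simply applies both definitions to the timings above.

```python
# Speedup and parallel efficiency from measured run times.
def speedup(serial_seconds: float, parallel_seconds: float) -> float:
    return serial_seconds / parallel_seconds


def efficiency(serial_seconds: float, parallel_seconds: float, processes: int) -> float:
    """1.0 means each process pulled its full weight; above 1.0 is 'superlinear'."""
    return speedup(serial_seconds, parallel_seconds) / processes


if __name__ == "__main__":
    s = speedup(238.35, 49.58)
    e = efficiency(238.35, 49.58, 4)
    print(f"speedup {s:.2f}x, efficiency {e:.2f}")
```

With our figures this works out at roughly 4.8x, or an efficiency of about 1.2 across four processes.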

Cluster computing isn’t just about crunching numbers. Fault-tolerance and load-balancing are other concepts well worth investigating. Some cluster types act as single web servers and keep working, even if you unplug all the Raspberry Pi computers in the cluster bar one.

Top tip: Load balancing

Clusters are also useful for acting as a single web server and sharing traffic, such as Mythic Beasts’ Raspberry Pi web servers.

Top tip: Fault tolerance

Certain cluster types, such as Docker Swarm or Kubernetes, allow individual nodes to fail without disrupting service.